When we look at AI usage, we see employees interacting with dozens of AI tools every month. Not because they are ignoring policy, but because AI is now embedded in how work gets done. Writing. Coding. Research. Analysis.

This gap between what organizations think is in use (ChatGPT, maybe Claude, a code assistant or two, …) and what is actually in use is where Shadow AI lives. And at scale, it becomes a security problem.

The Browser: A Leading Indicator of AI Sprawl

AI expansion inside organizations happens faster than most leaders realize. Even when looking at just one layer of the environment, the browser, the scale of adoption is striking.

Prompt Security data shows that organizations with 200–1,000 employees interact with an average of 45 distinct AI websites per month. In larger organizations (1,000–5,000 employees), that number climbs to 72 different AI sites monthly.

And that’s only one surface.

Beyond the browser, Prompt Security also monitors AI code assistants embedded in IDEs, desktop AI applications, AI features inside SaaS platforms, MCP-connected services, and internally built AI systems. Prompt Security’s MCP Gateway actively scans 12,304 publicly available MCP servers on GitHub.

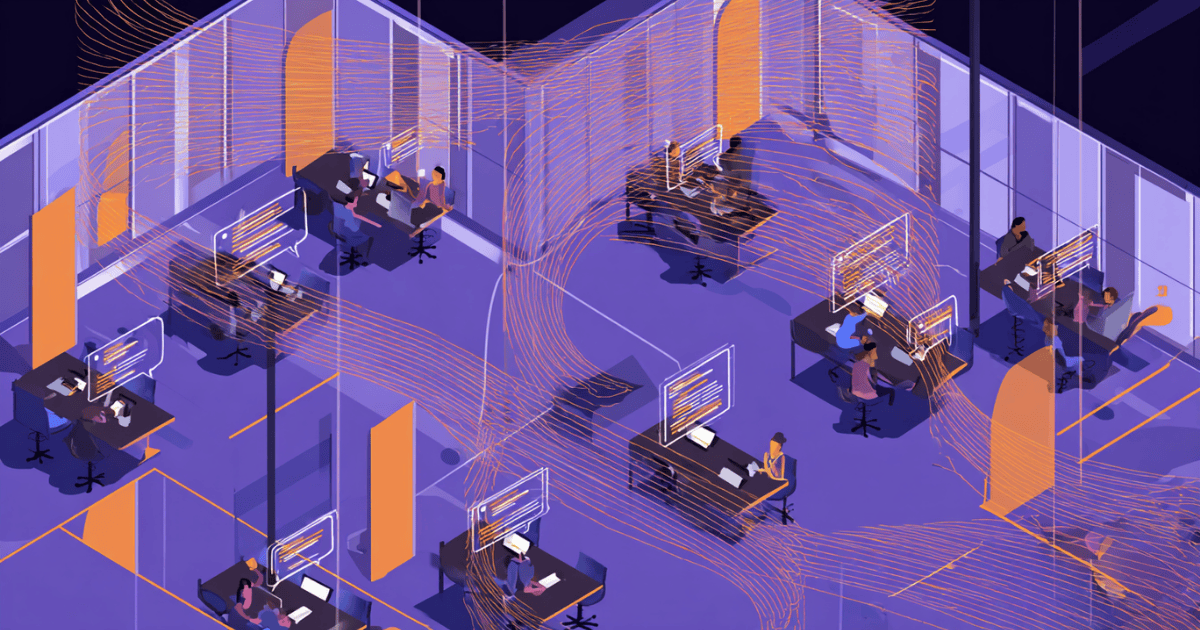

Viewed together, these layers reveal not isolated experimentation, but broad, decentralized AI sprawl across the enterprise.

Why Shadow AI Emerges From Everyday Work

Most AI usage is practical, not experimental.

Employees use AI to draft content, summarize documents, analyze data, and troubleshoot code. They move between tools based on task fit and convenience.

This behavior is normal. It is also difficult to govern without visibility.

Shadow AI does not emerge because employees want to bypass security. It emerges because AI tools are easy to access and immediately useful.

How AI Prompt Violations Accumulate at Scale

Across organizations, 1.6% of AI prompts contain a policy violation.

Most violations involve sensitive data. PII. Credentials. Internal or confidential information shared with an AI tool.

On its own, 1.6% sounds manageable. At scale, it is not.

In a 100-person company where each employee writes just 10 prompts per day, that rate results in 16 prompts containing policy violations every day: leaked API keys, email addresses, company secrets, Social Security numbers, and much more.
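The estimate is plain multiplication: headcount × prompts per employee per day × violation rate. Here is a minimal Python sketch of that arithmetic, using the 1.6% rate and the illustrative 10 prompts per day from this section. The function name is hypothetical, and the larger headcounts simply reuse the organization sizes mentioned earlier; they assume the same per-employee prompt volume, which real environments may not match.

```python
def expected_violations_per_day(employees: int,
                                prompts_per_employee: int,
                                violation_rate: float) -> float:
    """Expected number of prompts per day that contain a policy violation."""
    return employees * prompts_per_employee * violation_rate


# Inputs taken from the text: 1.6% violation rate, 10 prompts per employee per day.
VIOLATION_RATE = 0.016
PROMPTS_PER_EMPLOYEE = 10

daily = expected_violations_per_day(100, PROMPTS_PER_EMPLOYEE, VIOLATION_RATE)
print(round(daily))  # 16

# Same assumptions applied to larger headcounts (illustrative only).
for headcount in (1_000, 5_000):
    estimate = expected_violations_per_day(headcount, PROMPTS_PER_EMPLOYEE, VIOLATION_RATE)
    print(headcount, round(estimate))
```

The count grows linearly with headcount and prompt volume, which is why a rate that looks small in isolation still produces a steady daily stream of exposures.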

When sensitive data is shared with licensed, well-known tools, it’s not ideal, but it’s a manageable risk. When that same data is entered into unknown sites with unclear privacy and data handling policies, the risk changes. If it ends up in a training dataset, there’s no undo button.

Why Traditional Security Controls Miss Shadow AI

Most security programs were not designed for this pattern of activity.

Common gaps include:

- AI policies that assume a small, static list of tools

- Controls that focus on access instead of prompt content

- Violations treated as isolated incidents instead of ongoing signals

AI risk is not tied to a single model or vendor. It emerges from thousands of small interactions across many tools.

Without real-time visibility and enforcement, Shadow AI remains invisible until data leaves the organization.
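To make the idea of inspecting prompt content (rather than only controlling access) concrete, here is a deliberately simple Python sketch. It is not how Prompt Security or any particular product works: the pattern set, the names `PATTERNS` and `find_violations`, and the choice of regular expressions are all assumptions made for illustration. Real detection needs far broader coverage and handling of false positives.

```python
import re

# Hypothetical, intentionally small pattern set for illustration only.
PATTERNS = {
    # Rough email shape; not a full RFC-compliant matcher.
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # US Social Security number written as 123-45-6789.
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # Common shape of an AWS access key ID: "AKIA" plus 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def find_violations(prompt: str) -> list[str]:
    """Return the names of all patterns that match anywhere in the prompt."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(prompt)]


if __name__ == "__main__":
    sample = "Summarize this: contact jane@example.com, SSN 123-45-6789"
    print(find_violations(sample))
```

A check like this runs on the prompt text itself, so it applies the same way whichever AI tool the prompt is headed to, including tools that are not on an approved list.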

What Effective AI Governance Requires at Scale

AI usage will continue to expand. More tools. More prompts. More sensitive data moving through external systems.

The goal is not to stop employees from using AI. It is to make usage visible, apply policy consistently, and reduce risk without slowing work down.

At scale, even small percentages matter.

Prompt Security exists to help organizations see and control Shadow AI as it actually operates in real environments.

To see how Prompt Security gives real-time visibility and control over Shadow AI in your environment, schedule a demo with our team today.
