AI Risks & Vulnerabilities

AI introduces a new array of security risks, and we would know. As core members of the team that built the OWASP Top 10 for LLM Applications, we have firsthand insight into how AI is changing the cybersecurity landscape.

Top AI Risks & Vulnerabilities

Click each of these AI risks and vulnerabilities to learn more about it and how to mitigate it effectively.

Time to see for yourself

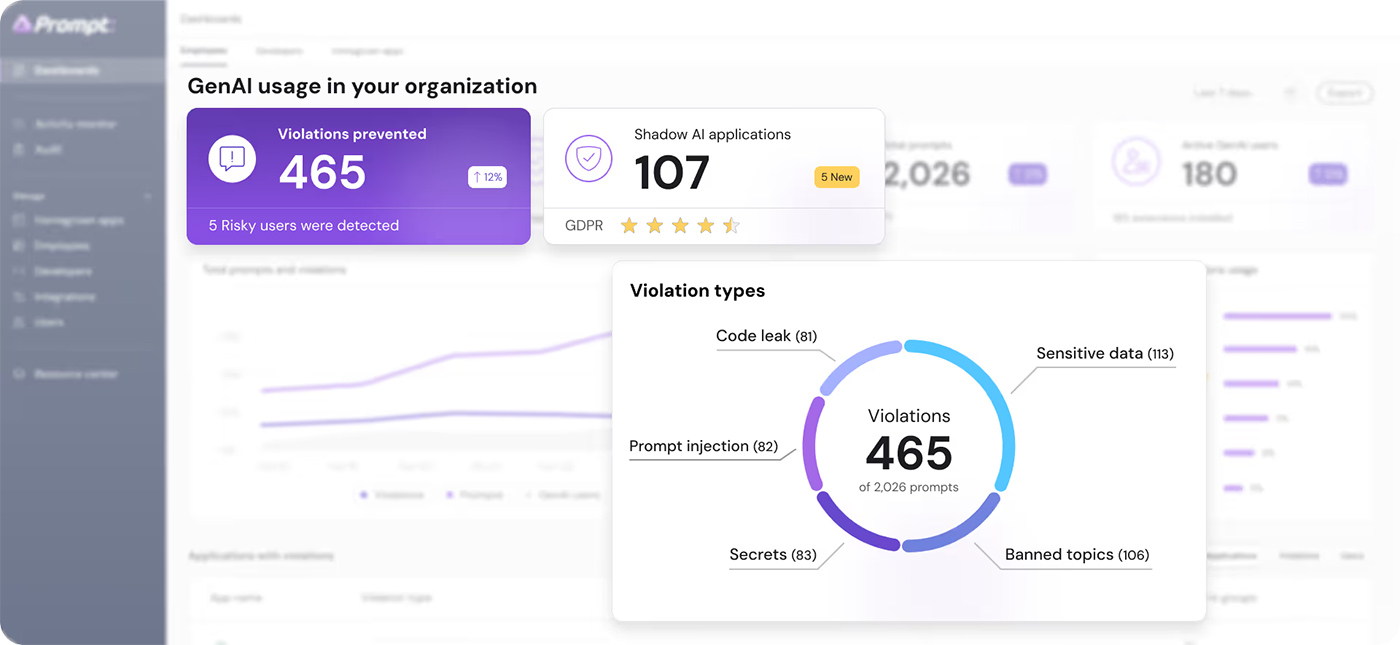

See how organizations are securely enabling AI with Prompt Security