Public discussion around Clawdbot has focused heavily on visible outcomes. Automated inbox cleanup, calendar management, and rapid task execution are presented as evidence of immediate value. These examples have driven attention and accelerated adoption. After all, delegating that kind of work is an easy sell.

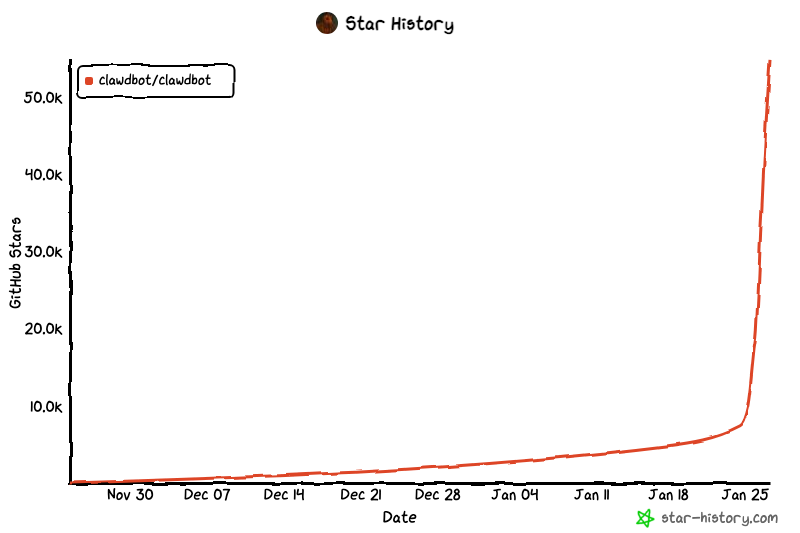

That attention is visible in usage signals as well. Within a day of going viral, Clawdbot’s GitHub repository surpassed 20,000 stars, doubling overnight, as interest translated into hands-on experimentation with autonomous agents (source).

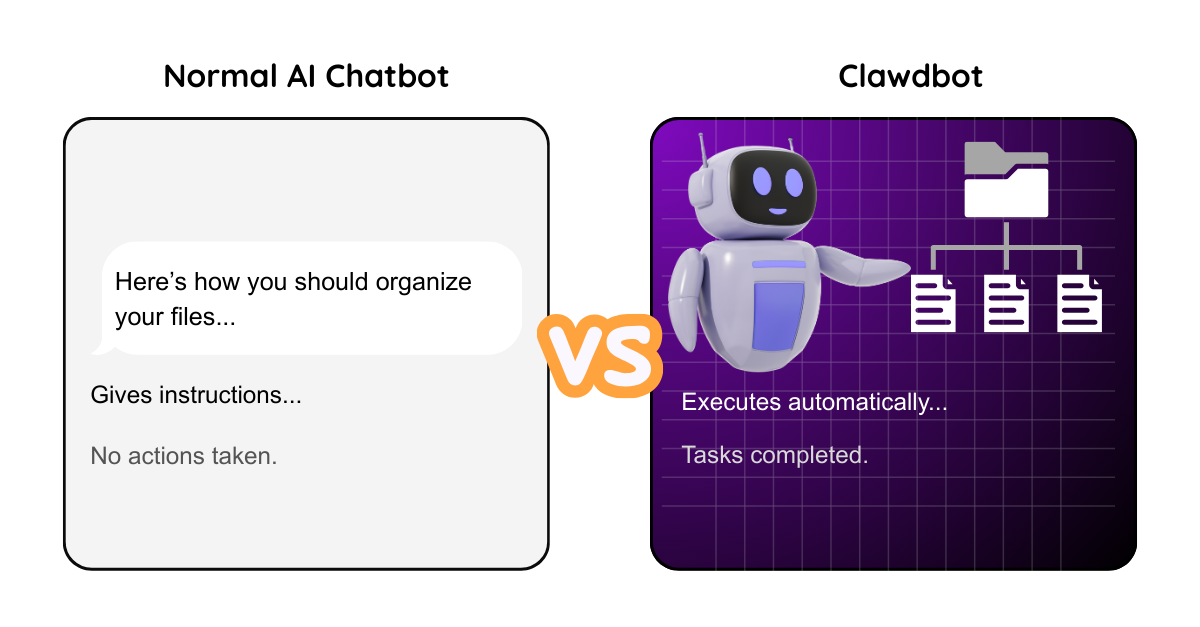

That momentum, however, has not been matched by careful evaluation. Clawdbot operates as an agentic system, capable of observing, deciding, and acting autonomously across personal workflows. Systems with this level of autonomy behave differently than traditional automation, and they tend to surface questions about control, risk, and responsibility much earlier than expected. Understanding what it means to introduce an always-on agent matters more than reacting to surface-level visibility or perceived urgency.

This is a dynamic we have seen repeatedly as AI systems move from assisting users to acting on their behalf.

Persistent System Access Creates Exposure

Once deployed, Clawdbot operates continuously and executes actions without ongoing user input. Its access can extend across server operations, file systems, messaging platforms, scheduling tools, and web activity.

Consolidating this level of access into a single automated system changes how risk accumulates. Decisions that would normally involve human judgment are instead handled automatically and often invisibly. Without clearly defined boundaries, even well-intentioned automation can behave in unexpected ways.

As autonomy increases, the cost of unclear controls increases with it. In agentic systems with persistent access, small inputs or background tasks can propagate across systems before a human has an opportunity to intervene. This is not unique to Clawdbot. It reflects a broader pattern we see whenever execution is delegated without strong guardrails.

For example, when agents are granted broad execution privileges without clear boundaries, inputs that appear informational can shape how the agent schedules background tasks or extends its behavior over time, sometimes without an explicit request or a clear moment for user approval.

Prompt Injection in Agentic Systems

When autonomous agents process untrusted input while retaining execution privileges, control boundaries become porous.

Much of the discussion around prompt injection has focused on chatbots and other LLM-powered applications, where the primary concerns are output quality, data disclosure, or inappropriate responses. Clawdbot, like other agentic systems, changes the nature of the problem. When an agent can act within personal or organizational systems, untrusted input no longer influences only what the model says. It influences what the system does.

In these environments, prompt injection is less about manipulating responses and more about shaping behavior. Inputs that appear routine can affect downstream decisions in ways that are difficult to observe or audit, particularly when agents operate continuously and across multiple tools.

This is why prompt injection persists as a structural risk in large language model systems. It is not a flaw that can be fully patched away. It is a consequence of how agentic systems reason over and act on input.
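One way to picture a control boundary here is a gate that tracks whether the agent has read untrusted content and, if so, requires human approval before any action with side effects. The sketch below is illustrative only: the tool names, the `AgentContext` class, and the `decide` function are hypothetical and are not part of Clawdbot or any specific framework. It does not prevent prompt injection; it only limits what an injected instruction can trigger without review.

```python
from dataclasses import dataclass, field

# Hypothetical tool names, grouped by whether they change anything outside the agent.
READ_ONLY_TOOLS = {"read_email", "search_web"}
SIDE_EFFECT_TOOLS = {"send_email", "run_shell", "create_event"}


@dataclass
class AgentContext:
    """Tracks whether the agent's working context contains untrusted content."""
    tainted: bool = False
    log: list = field(default_factory=list)

    def ingest(self, text: str, trusted: bool) -> None:
        # Once untrusted text is read, the context stays tainted for the session.
        if not trusted:
            self.tainted = True
        self.log.append(("ingest", trusted))


def decide(ctx: AgentContext, tool: str) -> str:
    """Return 'allow', 'needs_approval', or 'deny' for a proposed tool call."""
    if tool in READ_ONLY_TOOLS:
        return "allow"
    if tool in SIDE_EFFECT_TOOLS:
        # Actions with side effects need a human once untrusted input is in play.
        return "needs_approval" if ctx.tainted else "allow"
    # Deny by default: unknown tools are never executed.
    return "deny"


ctx = AgentContext()
print(decide(ctx, "send_email"))   # context is still clean
ctx.ingest("Email body from an unknown sender", trusted=False)
print(decide(ctx, "send_email"))   # now requires approval
```

The design choice worth noting is that the decision depends on where the input came from, not on whether its content looks suspicious, since injected instructions often appear routine.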

Usage-Based Costs Accumulate Quickly

Public discussion around Clawdbot often emphasizes the low cost of standing up the required infrastructure while downplaying ongoing usage-based expenses.

Claude Opus pricing is structured around token consumption, with input tokens priced at $5 per million and output tokens priced at $25 per million. Autonomous agents like Clawdbot generate usage continuously through background activity such as research, inbox processing, and web interaction.

As task complexity increases, costs scale with it. Rate limits and usage caps introduce friction precisely when agents are expected to operate independently. This gap between perceived and actual cost is another place where agentic systems behave differently than interactive tools.
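To make the arithmetic concrete, here is a rough estimate using the per-token prices quoted above. The workload figures (calls per day and tokens per call) are assumptions chosen purely for illustration, not measurements of Clawdbot's actual usage.

```python
# Prices quoted in the text (USD per million tokens).
INPUT_PRICE_PER_MTOK = 5.00
OUTPUT_PRICE_PER_MTOK = 25.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost for the given input and output token volumes."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_MTOK
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost


# Hypothetical always-on workload (assumed values, for illustration only).
CALLS_PER_DAY = 200
INPUT_TOKENS_PER_CALL = 20_000   # context re-sent on every call
OUTPUT_TOKENS_PER_CALL = 1_000

daily = estimate_cost(
    CALLS_PER_DAY * INPUT_TOKENS_PER_CALL,
    CALLS_PER_DAY * OUTPUT_TOKENS_PER_CALL,
)
monthly = daily * 30
print(f"daily: ${daily:.2f}, monthly: ${monthly:.2f}")
```

Under these assumed volumes the estimate comes to $25.00 per day, or $750.00 over 30 days. Most of that is input tokens, because an agent that re-reads its context on every background call pays for that context each time.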

Deployment Requires Operational Competence

Clawdbot functions as infrastructure rather than a consumer-ready product. Deploying it safely assumes familiarity with operational and security fundamentals.

This includes provisioning and maintaining a Linux server, configuring security controls, managing API authentication, handling tokens and permissions, defining command allowlists, and enforcing sandbox boundaries. These decisions determine how much authority an agent has and how failures are contained.
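As an illustration of what a command allowlist can look like, the sketch below checks a proposed shell command against a deny-by-default table before anything is executed. The `ALLOWED_COMMANDS` table and the `is_allowed` function are hypothetical and do not reflect Clawdbot's actual configuration format. A check like this complements sandboxing rather than replacing it.

```python
import shlex

# Hypothetical allowlist: program name -> permitted subcommands.
# None means any arguments are accepted for that program.
ALLOWED_COMMANDS = {
    "ls": None,
    "git": {"status", "log", "diff"},
}

# Shell metacharacters that could chain, redirect, or substitute commands.
FORBIDDEN_CHARS = set(";&|><`$\n")


def is_allowed(command_line: str) -> bool:
    """Return True only if the command matches the allowlist exactly."""
    if any(ch in FORBIDDEN_CHARS for ch in command_line):
        return False
    try:
        argv = shlex.split(command_line)
    except ValueError:
        # Unbalanced quotes or similar parse errors are rejected outright.
        return False
    if not argv:
        return False
    program, args = argv[0], argv[1:]
    if program not in ALLOWED_COMMANDS:
        return False  # deny by default
    allowed_subcommands = ALLOWED_COMMANDS[program]
    if allowed_subcommands is None:
        return True
    return bool(args) and args[0] in allowed_subcommands


for cmd in ["git status", "git push origin main", "ls; rm -rf /"]:
    print(cmd, "->", is_allowed(cmd))
```

Only the first of the three sample commands passes. Anything not explicitly listed is rejected, so a failure mode defaults to the agent doing less rather than more.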

The strongest outcomes tend to come from users who already understand these tradeoffs. This is consistent with what we have seen across agentic systems more broadly. As autonomy increases, operational discipline becomes more important, not less.

Installation Is Easier Than Safe Deployment

Most publicly shared setup guides for Clawdbot focus on installation and initial responsiveness. They demonstrate that an agent can be made to run, but they often stop short of what is required to run it safely.

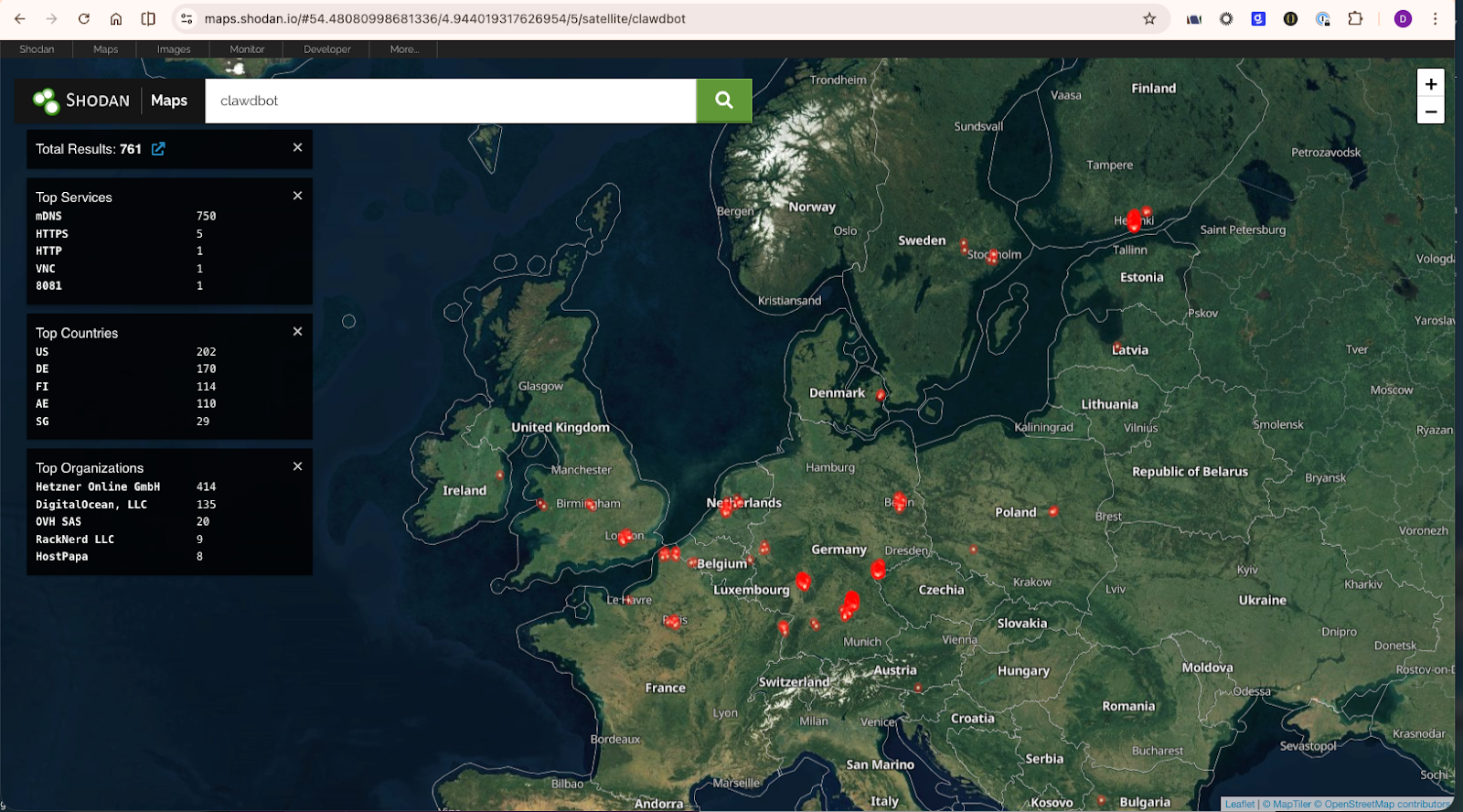

Controls such as pairing approvals, security audits, dependency management, sandbox configuration, and token scoping are frequently treated as secondary concerns, if treated at all. In practice, skipping these steps does not just leave an agent loosely configured. It effectively places an always-on system with broad access into a hostile environment without meaningful guardrails.

The gap between a working installation and a secure deployment is not just a matter of polish. It is the difference between a constrained assistant and an exposed system advertising its presence to the public internet. That distinction is easy to underestimate until something goes wrong.

A Familiar Adoption Pattern

New AI tooling often follows a familiar path. Early examples highlight impressive capability. Over time, operational constraints surface and expectations adjust.

Clawdbot fits into this pattern. Its technical capability is clear, but its practical usefulness depends on the user's experience, tolerance for operational overhead, and clarity around acceptable risk. Public examples tend to reflect successful configurations, while less visible outcomes fade from view.

This pattern is not a failure. It is a normal part of how new system classes mature.

Deployment Guidance

Clawdbot highlights a broader reality about agentic systems: successful use tends to correlate with operational familiarity. Users who are comfortable with terminal environments, API authentication, and system configuration are better positioned to reason about the tradeoffs involved.

In practice, these systems are often deployed in environments that were never designed for always-on agents. Personal machines double as work devices. BYOD setups blur the boundary between individual experimentation and access to work-related data. In those contexts, an agent’s reach can extend well beyond what the user initially intends.

For others, the friction is not just technical. Expectations of out-of-the-box reliability often collide with the realities of deploying autonomous systems that require ongoing oversight and constraint.

Treating systems like Clawdbot as production infrastructure rather than experimental software is often what separates controlled use from unintended behavior, even when deployment begins as a personal experiment.

Closing Perspective

Personal AI systems are evolving toward agents that operate continuously within active workflows and act on behalf of users in real time. Clawdbot provides an early example of that direction.

And while Clawdbot could be dismissed as another tool enjoying its 15 minutes of fame before the hype fades, the broader reality is harder to ignore. For those skeptical of agentic AI, one thing is increasingly clear: AI agents are here to stay. They are becoming more deeply embedded in personal and organizational systems, not as novelties, but as infrastructure.

As autonomous systems move closer to production workflows, the need for visibility, policy enforcement, and real-time control becomes foundational. Learn more about how Prompt Security can help.

P.S. Clawdbot’s own messaging reflects a broader shift toward execution-first systems, where review follows action rather than preceding it.
